Gwen Ottinger, Drexel University

(This article was originally published in the May 2017 edition and is re-published here alongside the new fully translated version.)

Almost two years ago, colleagues and I began an experiment in infrastructuring. Our working group of social scientists, programmers, environmental justice activists, and residents of “frontline” communities set out to create web-based tools that would help people make sense of, and make use of, large volumes of publicly available ambient air quality data. In our work together, I’ve learned firsthand four lessons about information technologies and their use in everyday life. They confirm the findings of social science researchers, yet they bear repeating for anyone striving not only to create new information technologies but also to ensure that those technologies actually help make facts matter in environmental justice campaigns.

The Meaning from Monitoring project was inspired by the work of activists in towns next to oil refineries in the San Francisco Bay Area. In 1995, residents of Crockett and Rodeo, California, pressured their refinery neighbor (then Unocal, now Phillips 66) to install a state-of-the-art ambient air monitoring system for toxic gases. It was the first of its kind, developed with significant technical input from community members, and it served as a model for nearby Benicia (next to a Valero refinery) and Richmond (home to a Chevron refinery). Both towns subsequently won fenceline monitoring programs of their own: Benicia’s ran from 2008 to 2012, and Richmond’s, begun in 2013, is a system that Chevron, like Phillips 66, continues to operate today. These communities’ collective efforts also led the Bay Area Air Quality Management District (BAAQMD) to adopt a rule in 2016 requiring all five refineries in its jurisdiction to set up fenceline monitoring programs.

Our infrastructuring project responded to what we saw as untapped potential in the data generated by these monitors. The data are publicly available, yet little used. While residents may look to the monitors’ website when they see a flare or smell something unusual, they haven’t folded the data into their campaigns against refinery permits and for new regulatory requirements. Nor have researchers used the data to learn more about regional air quality or environmental health.

There were clear infrastructural reasons for the relative neglect of fenceline monitoring data: the data weren’t easily downloadable, and the website emphasized the immediate situation without presenting a longer-term view. The goal of Meaning from Monitoring, then, was to create an infrastructure that would make the data more usable, and more strategically useful, for communities concerned about their exposure to toxins.

Since our initial participatory design workshop in April 2016, we have created a new website that enables users to explore current and historical data, set up a mailing list for daily reports on unusually high levels of pollution, and deployed an app through which residents can report noxious odors, with those reports displayed on the website alongside monitoring data. (Credit for this act of creation goes first and foremost to Amy Gottsegen, an undergraduate studying computer science at Drexel University, who did all the programming for these tools under the supervision of Randy Sargent, Senior Systems Scientist at Carnegie Mellon University’s CREATE Lab.)

Now that we have a working set of tools, however, their limitations are becoming obvious. Potential users are confused by the relationship between the website and the app, for example. What’s more, we are finding that our website, too, is not being used, so the potential of the data remains untapped.

Our tools are admittedly still new, and as yet advertised only among people active in refinery-related environmental activism. Yet the limited uptake among some of our most likely users suggests that usage will almost certainly be our key challenge in the months ahead. As we struggle to account for low rates of use and develop strategies for expanding our user base, my first temptation is to scrutinize our design decisions and participatory processes, looking for where we went wrong, where we failed to hear or give appropriate weight to community input, where we missed the opportunity to create a site that would be relevant, intuitive, and useful.

But in fact, I think what deserves scrutiny is less our process and more my initial expectation that we would–that we even could–create a website or app or even a suite of tools that was capable, in itself, of meeting the complex needs of potential users of air monitoring data. My expectations stemmed from a naive view of how technology–information infrastructure in particular–is made, and how it becomes part of social practice.

Lesson #1: Infrastructures are not created from scratch.

Before we began, I had imagined that we would be building a website from the ground up. That’s not how it worked, for two big reasons. First, code is easier and quicker to create when it’s adapted from other code, and on my budget (which, as part of a National Science Foundation-funded grant, was substantial but not unlimited), a developer would have to rely heavily on pre-existing site designs and information architectures.

Second, existing sites were an important resource for participants in the design process trying to envision what a more useful website could look like. Out of the various mock-ups and potential designs that the project team assembled for the design workshop, community participants strongly preferred the one fully implemented example, The Shenango Channel, in large part for the powerful visual statement made by the site, which integrates monitoring data, map, and time-lapse photography of the (now-shuttered) Shenango Coke Works. The Shenango Channel, developed by the CREATE Lab in collaboration with Allegheny County Clean Air Now (ACCAN), thus became a model for our own site.

Lesson #2: New infrastructures inherit the strengths and limitations of old ones.

In the months leading up to the design workshop, Drexel University undergraduate Nicholas Brooks worked with me and Intel Labs colleagues Dawn Nafus and Richard Beckwith to characterize the current state of web infrastructures for collecting and displaying data about conditions in fenceline communities. What Nick found, in short, was that one category of existing sites presented quantitative information (e.g., air monitoring data) to the public without offering a means for the public to contribute their own observations. The website on which Rodeo and Richmond fenceline monitoring data originally appeared, Fenceline.org, falls into this category, as do sites maintained by the United States Environmental Protection Agency, such as AirNow. A second category of website, including the Louisiana Bucket Brigade’s iWitness Map and the sites in the California-based IVAN reporting network, allows people to report their observations and experiences of pollution but is not integrated with quantitative data. Sites that did integrate quantitative and qualitative data (or, to think of it another way, that allowed for two-way communication, from monitor operator to affected resident and from affected resident to responsible authority) were unusual, and the two we did find, The Shenango Channel and LACEEN, each fell short in one dimension or the other. (At the time, the Shenango Channel’s reporting function still required a bit of manual labor to integrate community reports into the website; LACEEN’s monitoring data had yet to be integrated with its better-developed reporting function.)

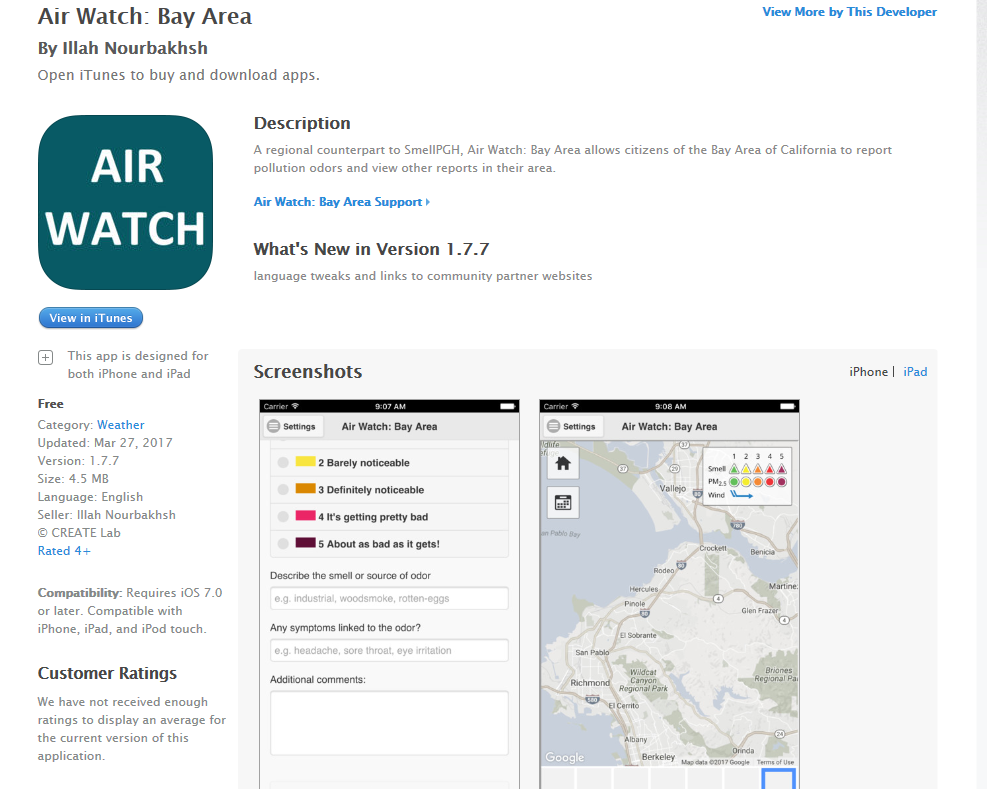

Having noted the disconnect, we aspired to integrate these functions better in our site from the start. But, drawing as we were on existing infrastructures for which that integration was an unresolved challenge, we foundered on exactly the same point. After creating a Shenango Channel-like interface for viewing real-time fenceline monitoring data, we faced the challenge of incorporating some sort of reporting function. Borrowing from the iWitness Map or a comparable Ushahidi-based platform was one possibility; creating a Bay Area version of the SmellPGH app, a successor to the Shenango Channel also developed by the CREATE Lab, was another. Neither would fold into our site in a way that would be seamless for users, and neither solved a central problem: how not only to collect residents’ reports about local impacts of pollution, but also to relay them to BAAQMD and other local authorities.

Lesson #3: Deploying open source software requires tacit knowledge.

We chose to adapt the SmellPGH app for our project because it was the fastest route to reporting capacity for residents of our partner communities. This expediency stemmed in no small part from our access to the original app’s designers: because Amy was working on the Meaning from Monitoring project from within the CREATE Lab, she was able to tap into the expertise of programmers there to understand what modifications were needed, and how to make them, for the app to work in another region. We also benefited (and continue to benefit) from the CREATE Lab’s back-end infrastructure for storing the reports collected by the app(s).

Although the iWitness Map and the platform on which it is built are also open source, we did not have similar access to people who had hands-on experience with them and could advise us on the finer points of deployment. Choosing that route would have been like trying to learn to bake bread from a recipe alone, rather than with a master baker standing beside you to point out when your dough had become “smooth and elastic” and how to tell the difference between risen and over-risen. We ended up with better bread, so to speak: a fully functional app that relays reports to the website, deployed in only about a month. The cost was frustrating residents with multiple platforms (a website and an app) to navigate.

Lesson #4: Uptake of new technology depends on links with on-going, everyday practice.

When we began our work, all of the community groups and activist organizations that might have been making use of data from the real-time monitors were doing without and working around, precisely because the data were so inaccessible. Our aim was to make the data more accessible, and to combine them with other streams of data, so that these groups wouldn’t have to continue to do without. Yet their success in working around also means there’s little pre-existing demand for the information our tools now offer. As a result, I see the biggest remaining challenge for the Meaning from Monitoring project not as creating the perfect design (though we will still be working out the obvious kinks in our current design). Rather, I think our big challenge is to work with the individuals and groups engaged in the ongoing work of protecting communities from petrochemical pollution to envision how fenceline monitoring data, and our suite of tools more generally, can help them accomplish their goals. Thinking through potential use cases with members of our working group, including Constance Beutel, Janet Callaghan, Kathy Kerridge, and Nancy Rieser, has already prompted us to create daily summaries that are easy to print, anticipating that they might become handouts at public meetings. More such conversations with a wide range of environmental, health, and social justice organizations in the Bay Area will, I hope, not just create a user base for our website and app but also guide our future development decisions.

As important as these four lessons are to my understanding of how to move the Meaning from Monitoring project forward, they will hardly be news to anyone with a background in Science and Technology Studies (STS). As an STS scholar, I should have known all of these things in advance, and in fact, at some level, I did. Lessons #1 and #2 paraphrase Leigh Star and Karen Ruhleder’s influential work on infrastructure; Lesson #4 not only resonates with Star and Ruhleder’s findings but could also be seen as a restatement of the “quandary of the fact-builder” that Bruno Latour describes in Science in Action. And Lesson #3 is but a short extension of a long tradition in STS showing that tacit knowledge, especially in laboratory practice, is an essential element of knowledge-making, and surely I’m not the first to apply the concept to open source software.

Why, then, do these feel like such revelations in the context of the Meaning from Monitoring project? To participate in designing and implementing the website and other tools, I had to set aside my analyst hat for a while and accept, relatively uncritically, an optimistic way of thinking about building resources and infrastructure for environmental health and justice campaigns. In this way of thinking, new technology is good for communities living on the frontlines of petrochemical pollution. They are, without question, underserved by technology: monitors are scarcer there, most websites are not designed with their residents in mind, and the more affordable smartphones many residents rely on have limited storage and RAM, which may hamper the use of additional apps. Fighting for access to appropriate technology is part and parcel of the environmental justice struggle, and finding funding to create new monitors, for example, or even to attempt a participatory design project is a victory in itself.

Now that there is a prototype, though, insights from STS can re-emerge. They help make sense of where the project is, and why, and they bring into focus the subtler points of how to ensure that the technologies we have created are really effective in community contexts. As we move forward, the challenge will be twofold. First, we must turn our heightened awareness that new technology is always constrained by old into strategic design modifications. Second, knowing that how a technology is used depends on how properties of its design are given meaning in practice, we must collaborate with potential users on new visions for how their practices can be enriched by monitoring data.

(Featured image: ‘Shell Refinery in Martinez, California’, credit: Gwen Ottinger. All other images are screenshots of the Air Watch website taken on 16/05/2017.)